publications

2025

-

SQuARE: Sequential Question Answering Reasoning Engine for Enhanced Chain-of-Thought in Large Language ModelsDaniel Fleischer, Moshe Berchansky, Gad Markovits, and 1 more authorFeb 2025

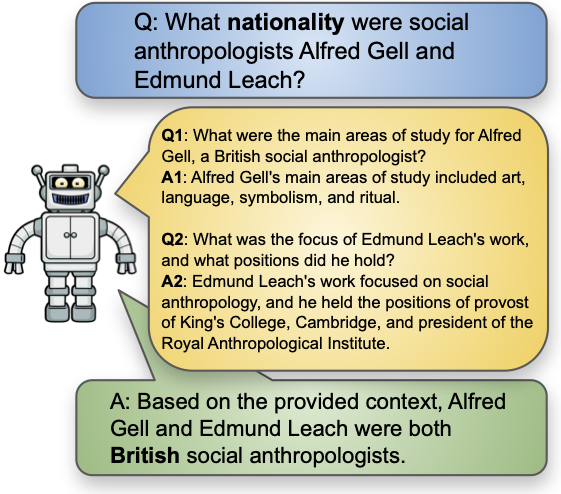

SQuARE: Sequential Question Answering Reasoning Engine for Enhanced Chain-of-Thought in Large Language ModelsDaniel Fleischer, Moshe Berchansky, Gad Markovits, and 1 more authorFeb 2025In the rapidly evolving field of Natural Language Processing, Large Language Models (LLMs) are tasked with increasingly complex reasoning challenges. Traditional methods like chain-of-thought prompting have shown promise but often fall short in fully leveraging a model’s reasoning capabilities. This paper introduces SQuARE (Sequential Question Answering Reasoning Engine), a novel prompting technique designed to improve reasoning through a self-interrogation paradigm. Building upon CoT frameworks, SQuARE prompts models to generate and resolve multiple auxiliary questions before tackling the main query, promoting a more thorough exploration of various aspects of a topic. Our expansive evaluations, conducted with Llama 3 and GPT-4o models across multiple question-answering datasets, demonstrate that SQuARE significantly surpasses traditional CoT prompts and existing rephrase-and-respond methods. By systematically decomposing queries, SQuARE advances LLM capabilities in reasoning tasks. The code is publicly available at https://github.com/IntelLabs/RAG-FiT/tree/square.

- HELMET: How to Evaluate Long-Context Language Models Effectively and ThoroughlyHoward Yen, Tianyu Gao, Minmin Hou, and 5 more authorsMar 2025

Many benchmarks exist for evaluating long-context language models (LCLMs), yet developers often rely on synthetic tasks such as needle-in-a-haystack (NIAH) or an arbitrary subset of tasks. However, it remains unclear whether these benchmarks reflect the diverse downstream applications of LCLMs, and such inconsistencies further complicate model comparison. We investigate the underlying reasons behind these practices and find that existing benchmarks often provide noisy signals due to limited coverage of applications, insufficient context lengths, unreliable metrics, and incompatibility with base models. In this work, we introduce HELMET (How to Evaluate Long-context Models Effectively and Thoroughly), a comprehensive benchmark encompassing seven diverse, application-centric categories. We also address several issues in previous benchmarks by adding controllable lengths up to 128K tokens, model-based evaluation for reliable metrics, and few-shot prompting for robustly evaluating base models. Consequently, we demonstrate that HELMET offers more reliable and consistent rankings of frontier LCLMs. Through a comprehensive study of 59 LCLMs, we find that (1) synthetic tasks like NIAH do not reliably predict downstream performance; (2) the diverse categories in HELMET exhibit distinct trends and low correlations with each other; and (3) while most LCLMs achieve perfect NIAH scores, open-source models significantly lag behind closed ones when tasks require full-context reasoning or following complex instructions – the gap widens as length increases. Finally, we recommend using our RAG tasks for fast model development, as they are easy to run and better predict other downstream performance; ultimately, we advocate for a holistic evaluation across diverse tasks.

2024

- CoTAR: Chain-of-Thought Attribution Reasoning with Multi-level GranularityMoshe Berchansky, Daniel Fleischer, Moshe Wasserblat, and 1 more authorNov 2024

State-of-the-art performance in QA tasks is currently achieved by systems employing Large Language Models (LLMs), however these models tend to hallucinate information in their responses. One approach focuses on enhancing the generation process by incorporating attribution from the given input to the output. However, the challenge of identifying appropriate attributions and verifying their accuracy against a source is a complex task that requires significant improvements in assessing such systems. We introduce an attribution-oriented Chain-of-Thought reasoning method to enhance the accuracy of attributions. This approach focuses the reasoning process on generating an attribution-centric output. Evaluations on two context-enhanced question-answering datasets using GPT-4 demonstrate improved accuracy and correctness of attributions. In addition, the combination of our method with finetuning enhances the response and attribution accuracy of two smaller LLMs, showing their potential to outperform GPT-4 in some cases.

-

RAG Foundry: A Framework for Enhancing LLMs for Retrieval Augmented GenerationDaniel Fleischer, Moshe Berchansky, Moshe Wasserblat, and 1 more authorAug 2024

RAG Foundry: A Framework for Enhancing LLMs for Retrieval Augmented GenerationDaniel Fleischer, Moshe Berchansky, Moshe Wasserblat, and 1 more authorAug 2024Implementing Retrieval-Augmented Generation (RAG) systems is inherently complex, requiring deep understanding of data, use cases, and intricate design decisions. Additionally, evaluating these systems presents significant challenges, necessitating assessment of both retrieval accuracy and generative quality through a multi-faceted approach. We introduce RAG Foundry, an open-source framework for augmenting large language models for RAG use cases. RAG Foundry integrates data creation, training, inference and evaluation into a single workflow, facilitating the creation of data-augmented datasets for training and evaluating large language models in RAG settings. This integration enables rapid prototyping and experimentation with various RAG techniques, allowing users to easily generate datasets and train RAG models using internal or specialized knowledge sources. We demonstrate the framework effectiveness by augmenting and fine-tuning Llama-3 and Phi-3 models with diverse RAG configurations, showcasing consistent improvements across three knowledge-intensive datasets. Code is released as open-source in https://github.com/IntelLabs/RAGFoundry.

2019

- Latent Universal Task-Specific BERTAlon Rozental, Zohar Kelrich, and Daniel FleischerarXiv:1905.06638 [cs, stat], May 2019

This paper describes a language representation model which combines the Bidirectional Encoder Representations from Transformers (BERT) learning mechanism described in Devlin et al. (2018) with a generalization of the Universal Transformer model described in Dehghani et al. (2018). We further improve this model by adding a latent variable that represents the persona and topics of interests of the writer for each training example. We also describe a simple method to improve the usefulness of our language representation for solving problems in a specific domain at the expense of its ability to generalize to other fields. Finally, we release a pre-trained language representation model for social texts that was trained on 100 million tweets.

2018

- Amobee at IEST 2018: Transfer Learning from Language ModelsAlon Rozental, Daniel Fleischer, and Zohar KelrichIn Proceedings of the 9th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, Oct 2018

This paper describes the system developed at Amobee for the WASSA 2018 implicit emotions shared task (IEST). The goal of this task was to predict the emotion expressed by missing words in tweets without an explicit mention of those words. We developed an ensemble system consisting of language models together with LSTM-based networks containing a CNN attention mechanism. Our approach represents a novel use of language models—specifically trained on a large Twitter dataset—to predict and classify emotions. Our system reached 1st place with a macro F1 score of 0.7145.

- Amobee at SemEval-2018 Task 1: GRU Neural Network with a CNN Attention Mechanism for Sentiment ClassificationAlon Rozental and Daniel FleischerIn Proceedings of The 12th International Workshop on Semantic Evaluation, Jun 2018

This paper describes the participation of Amobee in the shared sentiment analysis task at SemEval 2018. We participated in all the English sub-tasks and the Spanish valence tasks. Our system consists of three parts: training task-specific word embeddings, training a model consisting of gated-recurrent-units (GRU) with a convolution neural network (CNN) attention mechanism and training stacking-based ensembles for each of the sub-tasks. Our algorithm reached the 3rd and 1st places in the valence ordinal classification sub-tasks in English and Spanish, respectively.

2017

- Amobee at SemEval-2017 Task 4: Deep Learning System for Sentiment Detection on TwitterAlon Rozental and Daniel FleischerIn Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Aug 2017

This paper describes the Amobee sentiment analysis system, adapted to compete in SemEval 2017 task 4. The system consists of two parts: a supervised training of RNN models based on a Twitter sentiment treebank, and the use of feedforward NN, Naive Bayes and logistic regression classifiers to produce predictions for the different sub-tasks. The algorithm reached the 3rd place on the 5-label classification task (sub-task C).

2015

- IR Dualities in General 3d Supersymmetric SU(N) QCD TheoriesOfer Aharony and Daniel FleischerJournal of High Energy Physics, Feb 2015

In the last twenty years, low-energy (IR) dualities have been found for many pairs of supersymmetric gauge theories with four supercharges, both in four space-time dimensions and in three space-time dimensions. In particular, duals have been found for 3d N=2 supersymmetric QCD theories with gauge group U(N), with F chiral multiplets in the fundamental representation, with F’ chiral multiplets in the anti-fundamental representation, and with Chern-Simons level k, for all values of N, F, F’ and k for which the theory preserves supersymmetry. For SU(N) theories the duals have been found in some cases, such as F=F’ and F’=0, but not in the general case. In this note we find the IR dual for SU(N) SQCD theories with general values of N, F, F’ and a non-zero k, which preserve supersymmetry.